Building AI Recognition Models for the B2B Marketplace «Platferrum»

Of all the AI implementation cases in priority industries last year, 5.7% were digitalization projects. At the same time, B2B marketplaces are on the rise: marketplaces already account for 70% of online B2C sales, and the trend has now reached the business sector as well.

Platferrum is a metal products marketplace that combines both trends. In this case study, we describe how we helped implement AI-based recognition models and how many types of metal products the AI now recognizes.

Customer

Platferrum is a marketplace for the sale of metal products. The service launched in October 2022 and became the first project of its kind.

Problem

The Platferrum marketplace is where many different ways of filling out product documentation meet: each supplier describes its products in 1C or another system in whatever way it is used to and finds convenient. As a result, the Platferrum team encountered a wide variety of descriptions for the same product. For example, rebar can be recorded as “rebar,” “reb.150,” “rebar 150,” “steel reb. 150,” and so on. In the first months after launch, the team's content managers edited and normalized product cards manually.

However, once the number of suppliers exceeded 70 and the number of offers reached 20,000, there were no longer enough hands to handle the workload. Asking suppliers to use consistent terminology would interfere with their business processes and risk their loyalty. Supplier convenience is a priority for the marketplace: without suppliers there is no assortment, and without an assortment there are no buyers. Yet every new supplier adds more data, and manual processing falls further behind.

There were three possible solutions: hire more content managers, which would raise labor costs; have the project's own development team implement AI, which would divert valuable IT resources; or outsource the task.

Task

The SimbirSoft team had already worked on tasks for the platform, so Platferrum asked us to develop two ML (machine learning) modules:

The product description recognition and matching module is designed to analyze purchase requests for metal products from suppliers. It searches for product descriptions and quantities within these requests, matches the identified supplier product cards (KTP) with the corresponding reference cards (EKT) from the database, and determines the product category. If the product can be recognized, the supplier's card is added to the database and linked to the reference card. If not, a decision is made to add a new reference card.

The table recognition module for PDF documents extracts the information needed for scoring from financial reports that suppliers provide as PDFs. These reports are usually tabular, so the AI scoring task splits into two stages: recognizing the tables and finding the required data in them.

Challenges in working with the nomenclature of metal products

When we began working on the module, the database already held about 120,000 KTPs, matched either manually or by rules configured separately for each supplier. Preparing these configurations is cumbersome and involves unnecessary manual work, so an automatic matching module was the best option.

Each KTP and EKT contains a brief text description and a set of specific attributes that characterize the product.

Card Description:

“Electric welded straight seam pipe 18x1x6000 AISI 304 GOST 11068-81”

Attributes:

“Electric welded straight seam pipe” — product category

“18x1x6000” — size

“AISI 304” — steel grade

“GOST 11068-81” — GOST standard.
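To make the attribute structure concrete, here is a minimal rule-based sketch that splits this particular description into the four attributes above. The regular expressions and the function name `parse_card` are hypothetical and cover only this clean example; the next paragraphs explain why such rules break down on real supplier data, which is why the project relied on trained models instead.

```python
import re
from typing import Dict, Optional

# Hypothetical patterns that only cover the clean example above.
PATTERNS = {
    # sizes such as "18x1x6000"; the Cyrillic "х" is a common substitute for "x"
    "size": re.compile(r"\b\d+(?:[.,]\d+)?(?:\s*[xх*]\s*\d+(?:[.,]\d+)?)+\b"),
    # steel grades written in AISI notation, e.g. "AISI 304"
    "steel_grade": re.compile(r"\bAISI\s*\d{3}[A-Z]?\b"),
    # standard references such as "GOST 11068-81"
    "gost": re.compile(r"\bGOST\s+\d+(?:\.\d+)*-\d{2,4}\b"),
}


def parse_card(description: str) -> Dict[str, Optional[str]]:
    """Split a card description into attributes; the text before the first
    recognized attribute is taken as the product category."""
    result: Dict[str, Optional[str]] = {}
    first_start = len(description)
    for name, pattern in PATTERNS.items():
        match = pattern.search(description)
        result[name] = match.group(0) if match else None
        if match:
            first_start = min(first_start, match.start())
    result["category"] = description[:first_start].strip() or None
    return result


print(parse_card("Electric welded straight seam pipe 18x1x6000 AISI 304 GOST 11068-81"))
```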

Recognizing KTPs is not straightforward: attributes can appear in any order, each product category has its own set of attributes, some data may be missing, and the same attribute can be written in different ways.

In addition, words in these documents are often abbreviated, product lists are simplified (for example, several product names may be merged into one), and typos are common.

Matching is further complicated by the fact that the database contains about 30,000 reference cards, many with very similar descriptions. Sometimes two descriptions differ by a single character, usually in the size, GOST, or steel grade.

There may also be duplicates and cards with incorrect descriptions in the database.

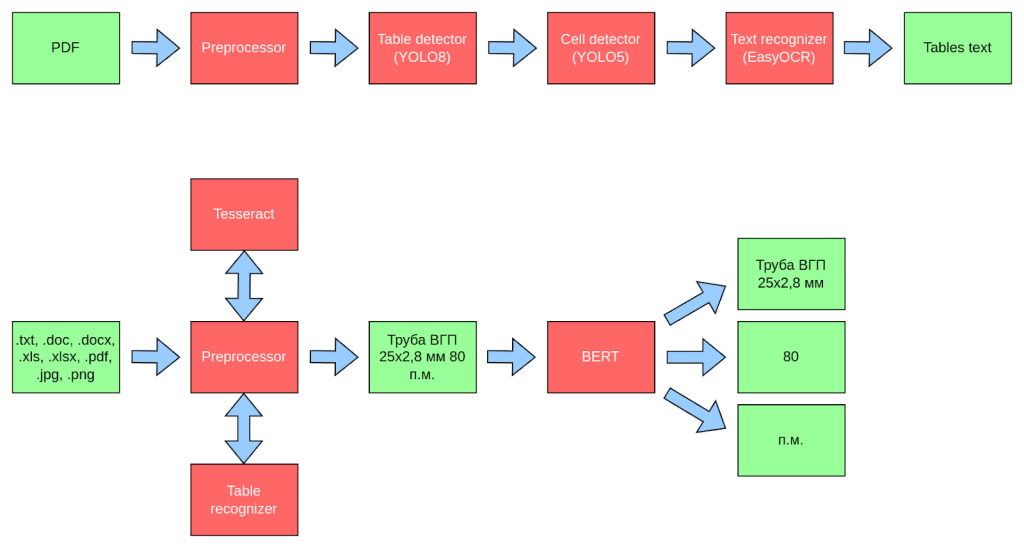

Supplier requests also arrive in different formats: plain text files (.txt), documents and spreadsheets (.doc, .docx, .xlsx, .xls), images (.jpg, .png), and even .pdf files.

Examples of Text Requests

“Beam 20Sh1 STO ASCM 2093 (C245) L=5370 7 pcs”

“Pipes (detailed list in the attached file) contractual volume”

“NKTP pipe 102x6.5x9500 mm with coupling and thread grade D steel 20 GOST 633-80 1.5 tons”

“Used pipes with an internal diameter of 340 mm, wall thickness not less than 12 mm, contractual volume”

“Need pipe scraps, used, clean, restored, stored without ovals, dents, ellipses, without insulation.x000D

108*5mm-1m, 108*9mm-1m, 219*6mm-1m, 219*9mm-1m, 530*8mm-1m, 530*12mm-1m, 820*10-1m, 820*12mm-1m, 1220*12mm-1m, 820*19mmx000D

1m,x000D1220*12-1m, 1220*19mm-1m contractual volume.”
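Raw requests like the last one need cleanup before any model sees them. The `x000D` fragments look like Excel's `_x000D_` escape for a carriage return inside a cell, possibly with the underscores stripped. Below is a minimal normalization sketch, assuming that interpretation; the helper names and the size pattern are hypothetical and only illustrate pulling `diameter*wall` pairs out of such text.

```python
import re
from typing import List, Tuple

# Excel writes a carriage return inside a cell as "_x000D_"; the underscores
# may be missing, so both forms are accepted (an assumption about the source).
CR_ESCAPE_RE = re.compile(r"_?x000D_?")
# "108*5mm", "1220*12", "102x6.5": outer diameter and wall thickness
PIPE_SIZE_RE = re.compile(r"(\d+(?:[.,]\d+)?)\s*[*xх]\s*(\d+(?:[.,]\d+)?)(?:\s*mm)?")


def normalize_request(text: str) -> str:
    """Turn escaped carriage returns into newlines and collapse runs of spaces."""
    text = CR_ESCAPE_RE.sub("\n", text)
    return re.sub(r"[ \t]+", " ", text).strip()


def extract_pipe_sizes(text: str) -> List[Tuple[float, float]]:
    """Return (diameter, wall thickness) pairs found in a free-text request."""
    pairs = PIPE_SIZE_RE.findall(normalize_request(text))
    return [(float(d.replace(",", ".")), float(w.replace(",", "."))) for d, w in pairs]


sample = "without insulation.x000D\n\n108*5mm-1m, 219*6mm-1m, 820*19mmx000D\n\n1m,x000D1220*12-1m"
print(extract_pipe_sizes(sample))
```

The length suffixes such as `-1m` are deliberately left out; a real parser would also need to capture them.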

Each format required a special approach:

- If the request is in text form, it may contain additional information about the supplier, not just a product description. To filter this out, intelligent text processing is needed.

- If the request is an Excel file, the required data must be extracted from its tables. This requires converting the tables to text accurately without breaking the document structure.

- If requests are presented as images, they are usually scans or photos of requests that need to be recognized and converted into text.

- If the request arrives as a PDF file, it may be either a scan or a text document, so both image and text processing are required.

- It was essential to train language models to understand the nuances of production nomenclature.

Solution

We treated product recognition in text requests as a named entity recognition (NER) task. In our case, the entities are product descriptions, their quantities, and units of measurement.
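To illustrate the NER framing, the sketch below converts character-level span annotations into word-level BIO tags, the usual input format for a token-classification model. The labels `PRODUCT`, `QTY`, and `UNIT` and the whitespace tokenization are assumptions for illustration; a BERT model would additionally need these word tags aligned to its subword tokens.

```python
import re
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end, label) as character offsets


def bio_tags(text: str, spans: List[Span]) -> List[Tuple[str, str]]:
    """Tag whitespace-separated words with B-/I-/O labels.

    Assumes every span starts and ends on a word boundary.
    """
    tagged = []
    for word in re.finditer(r"\S+", text):
        tag = "O"
        for start, end, label in spans:
            if word.start() >= start and word.end() <= end:
                tag = ("B-" if word.start() == start else "I-") + label
                break
        tagged.append((word.group(0), tag))
    return tagged


request = "Beam 20Sh1 STO ASCM 2093 (C245) L=5370 7 pcs"
product = "Beam 20Sh1 STO ASCM 2093 (C245) L=5370"
qty_start = len(product) + 1  # the quantity follows the product after one space
spans = [
    (0, len(product), "PRODUCT"),
    (qty_start, qty_start + 1, "QTY"),
    (request.index("pcs"), len(request), "UNIT"),
]
for word, tag in bio_tags(request, spans):
    print(f"{word:10} {tag}")
```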

Many NLP models can be trained for this kind of task. We chose BERT-like Russian language models, rubert-tiny2 and sbert_large_nlu_ru, because they offer a good balance of speed and quality. We also tried large language models (LLMs) such as YandexGPT and Llama. With YandexGPT, the main challenge was finding a universal prompt; with Llama, the high computational requirements and the difficulty of integrating it with the existing infrastructure were significant hurdles.

To train the model, we annotated a special dataset containing about 4,000 text requests from various suppliers. To combat overfitting, we applied preprocessing and data augmentation methods, dropout regularization, and early stopping.
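Early stopping halts training once the validation loss stops improving. In PyTorch Lightning this is the built-in `EarlyStopping` callback (with `monitor`, `patience`, and `min_delta` arguments); the stdlib sketch below only shows the underlying rule, and the patience value is an assumption, not the project's setting.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss   # improvement: remember it and reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1   # no improvement this epoch
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate([0.90, 0.70, 0.65, 0.66, 0.67, 0.68, 0.50]):
    if stopper.step(loss):
        print(f"stopping after epoch {epoch}, best loss {stopper.best}")
        break
```

Here training stops after epoch 5, because three consecutive epochs failed to beat 0.65; the later 0.50 is never reached.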

The model training was based on PyTorch, PyTorch Lightning, and Transformers. We chose the PyTorch ecosystem due to its popularity, availability of Russian pre-trained models, and large community support.

Requests arrive not only as plain text but also as files, so we needed a parser for each format. For this, we used several Python libraries and tools:

- .doc, .docx: docx2txt, python-doc, LibreOffice

- .xls, .xlsx: pandas

- .pdf: pymupdf, pdf2image
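The per-format handling described above can be summarized as a routing table keyed by file extension. This is a sketch: the route names and `route_request` are hypothetical, and each route would call the libraries listed above (for example, pandas for spreadsheets).

```python
from pathlib import Path

# Hypothetical route names; comments show the tools listed in the article.
ROUTES = {
    ".txt": "text",         # plain text goes straight to the NER model
    ".doc": "document",     # docx2txt, python-doc, LibreOffice
    ".docx": "document",
    ".xls": "spreadsheet",  # pandas
    ".xlsx": "spreadsheet",
    ".jpg": "ocr",          # image: OCR first, then NER
    ".png": "ocr",
    ".pdf": "pdf",          # pymupdf for a text layer, pdf2image + OCR for scans
}


def route_request(filename: str) -> str:
    """Pick a processing route from the file extension (case-insensitive)."""
    suffix = Path(filename).suffix.lower()
    if suffix not in ROUTES:
        raise ValueError(f"unsupported request format: {filename!r}")
    return ROUTES[suffix]


print(route_request("Request_2024.XLSX"))
```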

Documents may contain images as well as text, such as photos of requests with various annotations, or scans. The text in them must therefore be recognized by an OCR model before it is passed to the NLP model. For this, we used the popular Tesseract OCR engine because it is relatively fast, even though it is less accurate than alternatives such as EasyOCR.

Requests often come as tables in which each row holds a product name, with quantities and units of measurement in separate columns. In such cases an NLP model is unnecessary: if the columns have headers, the entities can be identified from the column names alone. A tool for recognizing tables in images and scans, which often appear in PDF documents, was therefore very useful. We borrowed this functionality from the second module, for scoring, described below.
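When a table has headers, entity extraction can be as simple as mapping column names to entity types. A minimal sketch; the keyword lists and function names are hypothetical, and a table whose product column is not recognized falls back to the NER model (signalled here by returning `None`).

```python
from typing import Dict, List, Optional

# Hypothetical header keywords (Russian and English); extend for real data.
HEADER_KEYWORDS = {
    "product": ("наименование", "name", "product"),
    "quantity": ("количество", "кол-во", "qty", "quantity"),
    "unit": ("ед. изм", "unit", "uom"),
}


def map_columns(header: List[str]) -> Dict[str, int]:
    """Map each entity type to the index of the first column whose header matches."""
    mapping: Dict[str, int] = {}
    for idx, cell in enumerate(header):
        text = cell.strip().lower()
        for entity, keywords in HEADER_KEYWORDS.items():
            if entity not in mapping and any(k in text for k in keywords):
                mapping[entity] = idx
                break
    return mapping


def rows_to_entities(rows: List[List[str]]) -> Optional[List[Dict[str, str]]]:
    """Turn a table (header row first) into product records, or return None if
    the product column cannot be identified and NER should be used instead."""
    if not rows:
        return None
    mapping = map_columns(rows[0])
    if "product" not in mapping:
        return None
    records = []
    for row in rows[1:]:
        record = {e: row[i].strip() for e, i in mapping.items() if i < len(row)}
        if record.get("product"):  # skip empty or separator rows
            records.append(record)
    return records


table = [
    ["№", "Наименование", "Кол-во", "Ед. изм."],
    ["1", "Rebar A500C 12 mm", "5", "t"],
    ["2", "", "", ""],
]
print(rows_to_entities(table))
```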

As a result, the product recognition scheme was as follows:

The problem of matching KTPs (supplier product cards) with EKTs (reference product cards) was treated as a classification task in which each reference product is a separate class.

So, with about 30,000 EKTs in the database, there are about as many classes. Each class contains a single EKT and all KTPs matched to it as samples, since these are simply different ways of recording the same product.
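Building the training set then amounts to grouping descriptions by reference card. A sketch under assumed data shapes: the ids, dictionaries, sample descriptions, and `build_classes` are hypothetical; the rebar variants are the ones quoted earlier.

```python
from typing import Dict, List, Tuple


def build_classes(ekts: Dict[str, str],
                  matches: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group all known descriptions of each product under its EKT id.

    ekts:    EKT id -> reference description
    matches: (KTP description, EKT id) pairs already matched in the database
    """
    classes = {ekt_id: [text] for ekt_id, text in ekts.items()}
    for ktp_text, ekt_id in matches:
        if ekt_id in classes:  # skip links to reference cards that no longer exist
            classes[ekt_id].append(ktp_text)
    return classes


ekts = {
    "EKT-1": "Rebar 150",
    "EKT-2": "Electric welded straight seam pipe 18x1x6000 AISI 304 GOST 11068-81",
}
matches = [
    ("reb.150", "EKT-1"),
    ("steel reb. 150", "EKT-1"),
    ("rebar 150", "EKT-1"),
    ("Pipe el.-welded 18x1x6000 AISI304", "EKT-2"),
]
classes = build_classes(ekts, matches)
print({k: len(v) for k, v in classes.items()})
```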

To train the model, we used the 120,000 KTPs already matched in the database. That gives only about 4 examples per class on average (120,000 / 30,000), which is very few. Moreover, products occur with very uneven frequency: some, like steel sheets, are very popular, while others, like steel valves, have only one entry.

Therefore, to avoid overfitting the model, we filtered out rarely occurring products and applied data augmentation methods.
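A minimal sketch of both steps. The `min_examples` threshold, the number of augmented copies, and the three transformations (abbreviating a word as in "reb.", swapping `x` for `*` in sizes, and dropping the GOST reference) are assumptions modeled on the variations described earlier, not the project's actual recipe.

```python
import random
import re
from typing import Dict, List, Tuple

# Hypothetical abbreviation table; "reb." is the variant quoted for rebar.
ABBREVIATIONS = {"rebar": "reb."}


def augment(text: str, rng: random.Random) -> str:
    """Produce a noisy variant of a description, mimicking supplier habits."""
    out = text
    for full, short in ABBREVIATIONS.items():
        if rng.random() < 0.5:
            out = re.sub(rf"\b{re.escape(full)}\b", short, out, flags=re.IGNORECASE)
    if rng.random() < 0.5:
        out = re.sub(r"(?<=\d)x(?=\d)", "*", out)           # 18x1x6000 -> 18*1*6000
    if rng.random() < 0.3:
        out = re.sub(r"\s*GOST\s+[\d.]+-\d{2,4}", "", out)  # drop the standard
    return out


def prepare_training_set(classes: Dict[str, List[str]], min_examples: int = 3,
                         n_aug: int = 2, seed: int = 0) -> List[Tuple[str, str]]:
    """Drop rare classes, then add `n_aug` augmented copies of every description."""
    rng = random.Random(seed)
    dataset: List[Tuple[str, str]] = []
    for label, texts in classes.items():
        if len(texts) < min_examples:
            continue  # too few examples to learn this product reliably
        for text in texts:
            dataset.append((text, label))
            dataset.extend((augment(text, rng), label) for _ in range(n_aug))
    return dataset


data = prepare_training_set({
    "EKT-1": ["Rebar 150", "reb.150", "steel reb. 150"],
    "EKT-2": ["Steel valve DN50"],  # a single entry, so this class is dropped
})
print(len(data))
```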

We trained the classifier in the standard way: we took the pretrained rubert-tiny2 model, added a linear layer with an output size equal to the number of classes, and applied softmax to its output.

Before the linear layer, however, the output token embeddings from BERT must be aggregated into a single vector, for example by combining mean and max pooling. After training the classifier, we discarded the linear layer and matched products by finding the nearest ones by cosine similarity between the BERT output embeddings.
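The pooling and retrieval steps can be sketched in plain Python. In the real system these operations run on PyTorch tensors from rubert-tiny2; here the vectors are short lists, mean and max pooling are concatenated (one way of combining them), and the similarity `threshold` below which a request counts as unmatched, and so becomes a candidate for a new reference card, is a hypothetical value. With about 30,000 reference cards, the linear scan would normally be replaced by a single matrix multiplication or a vector index.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]


def mean_max_pool(token_embs: Sequence[Vector], mask: Sequence[int]) -> Vector:
    """Concatenate mean- and max-pooled token vectors, skipping padding (mask == 0)."""
    kept = [vec for vec, m in zip(token_embs, mask) if m]
    if not kept:
        raise ValueError("no non-padding tokens")
    dim = len(kept[0])
    mean = [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]
    maxi = [max(vec[i] for vec in kept) for i in range(dim)]
    return mean + maxi


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_reference(query: Vector, references: Dict[str, Vector],
                      threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (EKT id, similarity) of the closest reference embedding, or
    (None, similarity) when even the best match is below `threshold`."""
    best_id, best_sim = None, -1.0
    for ref_id, emb in references.items():
        sim = cosine(query, emb)
        if sim > best_sim:
            best_id, best_sim = ref_id, sim
    return (best_id if best_sim >= threshold else None), best_sim


print(mean_max_pool([[1.0, 0.0], [3.0, 2.0], [9.0, 9.0]], mask=[1, 1, 0]))
refs = {"EKT-1": [1.0, 0.0], "EKT-2": [0.0, 1.0]}
print(nearest_reference([0.9, 0.1], refs))
print(nearest_reference([1.0, 1.0], refs))
```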

As a result, we obtained two models that recognize data in supplier cards, match them with reference cards, and are not thrown off by phrases unrelated to the product, such as greetings or wishes for a good day.

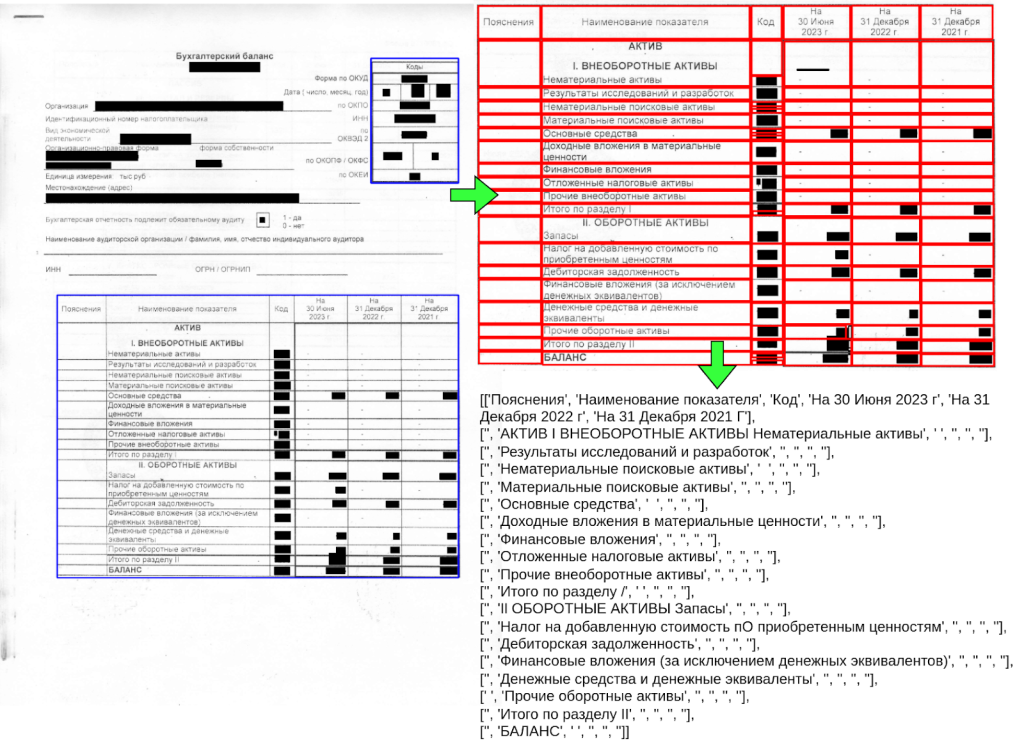

In the scoring module, searching for data in tables comes down to finding the row and column with the required code and text description. Using ML for this search would be redundant and would only introduce errors, so we focused on table recognition.
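Once a table has been recognized as a grid of cell strings, the lookup itself is rule-based. A minimal sketch; the function names, header names, line codes, and figures in the sample table are illustrative, not taken from a real report.

```python
from typing import List, Optional


def _column(header: List[str], title: str) -> Optional[int]:
    """Index of the first header cell containing `title` (case-insensitive)."""
    title = title.lower()
    return next((i for i, cell in enumerate(header) if title in cell.lower()), None)


def find_value(table: List[List[str]], code: str, period: str,
               code_header: str = "code") -> Optional[str]:
    """Return the value in the row with line `code` and the column whose header
    mentions `period`, or None if either cannot be found."""
    if not table:
        return None
    code_col = _column(table[0], code_header)
    value_col = _column(table[0], period)
    if code_col is None or value_col is None:
        return None
    for row in table[1:]:
        if code_col < len(row) and row[code_col].strip() == code:
            return row[value_col].strip() if value_col < len(row) else None
    return None


report = [
    ["Indicator", "Code", "For 2023", "For 2022"],
    ["Revenue", "2110", "1 250 000", "980 000"],
    ["Net profit (loss)", "2400", "75 000", "61 000"],
]
print(find_value(report, "2110", "2023"))
```

For reports with Russian headers, `code_header` would be set to the corresponding header text, for example "Код".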

Detecting tables with visible borders is a common computer vision task, but here we faced an additional challenge: finding borderless tables and correctly recognizing their cells. Applying text recognition directly to the whole document does not work, because the table structure must be preserved for subsequent processing. The PDF format adds complications of its own: pages may have different orientations and slight rotation angles, and some documents already contain a readable text layer and need no ML-based recognition at all. Additional preprocessing is therefore necessary.

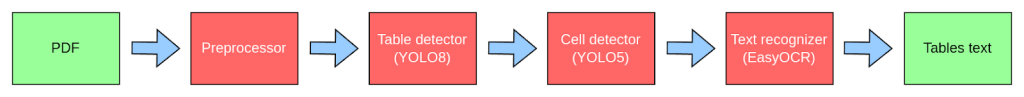

To solve the recognition task, we prepared and annotated a dataset that includes classes for tables and cells, and trained the corresponding detector models on it. As a result, we obtained the following pipeline:

1. Preprocessor, which reads the input PDF document and preprocesses it:

• Determines whether the document is a scan

• If it is a scan, converts each page into a grayscale image and corrects the page orientation and rotation angle

• If it is a readable (searchable) PDF, parses it with the pymupdf library and skips the remaining steps.

2. Table detector based on YOLOv8, which finds the boundaries of all tables in the document and classifies each table's borders as clear or unclear.

3. Cell detector within the table:

• If the table boundaries are clear, a classical computer vision algorithm detects the cell borders, and no model is required.

• If the table boundaries are unclear, a cell boundary detector model based on YOLOv5 is applied.

4. Text recognition within cells using EasyOCR, which consists of two models:

• A pretrained text detector based on the CRAFT model

• A pretrained Russian text recognition model based on the CRNN architecture.
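The article does not say how the preprocessor decides whether a PDF is a scan. One simple heuristic, sketched below as an assumption, is to check how many pages carry a usable text layer; the function name and thresholds are hypothetical. With PyMuPDF, the page texts would come from something like `[page.get_text() for page in fitz.open(path)]`.

```python
from typing import Sequence


def is_scanned(page_texts: Sequence[str], min_chars: int = 20,
               min_text_share: float = 0.5) -> bool:
    """Treat a PDF as a scan when fewer than `min_text_share` of its pages carry
    at least `min_chars` characters of extractable text."""
    if not page_texts:
        return True  # nothing extractable at all
    text_pages = sum(1 for text in page_texts if len(text.strip()) >= min_chars)
    return text_pages / len(page_texts) < min_text_share


print(is_scanned(["", "  \n"]))          # no text layer on any page
print(is_scanned(["Balance sheet " * 5]))  # one page with real text
```

A mixed document, with some scanned pages and some text pages, could instead be routed page by page.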

The main problem was the pipeline's low speed: processing a single multi-page PDF document with scans could take several minutes, most of it spent on text recognition. By tuning the EasyOCR models, we partially optimized this stage and doubled the pipeline's performance.

During development, we noticed a peculiarity of the detector: it returned cell coordinates ordered not left to right and top to bottom, but by the model's confidence score. Once we understood this, we wrote an algorithm that groups cell coordinates into rows for analysis and for generating the output.
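The article does not spell out this algorithm; the sketch below shows one common way to do it, as an assumption. Boxes arrive in the detector's confidence order, are sorted by vertical center, grouped into rows when their centers lie within a tolerance (here half the median cell height, a hypothetical choice), and each row is then sorted left to right. The function name `cells_to_rows` is hypothetical.

```python
from statistics import median
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2 in pixels


def cells_to_rows(boxes: List[Box], tol: float = 0.5) -> List[List[Box]]:
    """Group detector boxes into rows (top to bottom), each sorted left to right.

    A box joins the current row when its vertical center is within
    `tol` times the median cell height of that row's mean center.
    """
    if not boxes:
        return []
    limit = tol * median(b[3] - b[1] for b in boxes)
    rows: List[List[Box]] = []
    centers: List[float] = []
    for box in sorted(boxes, key=lambda b: (b[1] + b[3]) / 2):
        center = (box[1] + box[3]) / 2
        if rows and abs(center - centers[-1]) < limit:
            rows[-1].append(box)
            centers[-1] += (center - centers[-1]) / len(rows[-1])  # running mean
        else:
            rows.append([box])
            centers.append(center)
    return [sorted(row, key=lambda b: b[0]) for row in rows]


# A 2x2 grid returned in "confidence order" rather than reading order.
detected = [(50, 21, 100, 41), (0, 0, 50, 20), (50, 0, 100, 20), (0, 20, 50, 40)]
for row in cells_to_rows(detected):
    print(row)
```

Merged cells that span several rows would need extra handling, for example assigning them to every row they overlap.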

As a result, we built a tool that processes PDF documents containing financial reports and automatically extracts the required data from them.

Result

The modules are working in production and keep up with the growing number of suppliers, which has already exceeded 170. The assortment keeps growing and attracts new users.

Growing the platform without AI and process automation would cost several times more, so for the platform artificial intelligence is no longer a trend but a necessity.

By 2030, according to forecasts from the Ministry of Energy, the share of enterprises using artificial intelligence in production will rise to 80%.

Even today, amid intense market competition and a labor shortage, implementing AI is a significant advantage.